I’ve been busy defining objects in my Unity Catalog metastore to create a secure exploratory environment for analysts and data scientists. I’ve found a lack of examples for doing this in Azure with file types other than delta (maybe you’re reading this in the future and this is no longer a problem, but it was when I wrote this). So I wanted to get some more examples out there in case it helps others.

I’m not storing any data in Databricks – I’m leaving my data in the data lake and using Unity Catalog to put a tabular schema on top of it (hence the use of external tables vs managed tables. In order to reference an ADLS account, you need to define a storage credential and an external location.

External tables

External tables in Databricks are similar to external tables in SQL Server. We can use them to reference a file or folder that contains files with similar schemas. External tables can use the following file formats:

- delta

- csv

- json

- avro

- parquet

- orc

- text

If you don’t specify the file format in the USING clause of your DDL statement, it will use the default of delta.

Below is an example of creating an external table from a single CSV file.

CREATE TABLE mycatalog.myschema.external_table1

USING CSV

OPTIONS (header "true", inferSchema "true")

LOCATION 'abfss://containername@storageaccountname.dfs.core.windows.net/TopFolder/SecondFolder/myfile.csv';Because I have used the LOCATION clause, this is an external table that stores just metadata. This SQL locates the specified file in my data lake and has Databricks create the schema based upon that file instead of me defining each column. Notice that I have specified in the options on the third line that there is a header row in my file and that Databricks should figure out the schema of the table.

Alternatively, I could explicitly define the columns for my external table. You can find the list of supported data types here.

CREATE TABLE mycatalog.myschema.external_table1

(

colA INT,

colB STRING,

colC STRING

)

USING CSV

OPTIONS (header "true")

LOCATION 'abfss://containername@storageaccountname.dfs.core.windows.net/TopFolder/SecondFolder/myfile.csv';External views

I had some JSON data in my lake that Databricks couldn’t automatically convert to a table so I created some external views. My data had a format similar to the below, with each document containing a single array that contained multiple objects, some of which were nested.

{

"mystuff": [

{

"cola": "1",

"colb": "2",

"colc": "abc",

"nestedthing": {

"id": 1,

"name": "thing1"

}

},

{

"cola": "2",

"colb": "4",

"colc": "def",

"nestedthing": {

"id": 22,

"name": "thing22"

}

},

{

"cola": "3",

"colb": "6",

"colc": "ghi"

}

]

}The example view below directly queries a file in the data lake.

CREATE VIEW mycatalog.myschema.external_view1

select

src.cola,

src.colb,

src.colc,

src.nestedthing.id,

src.nestedthing.name

FROM

(

select

explode(mystuff) src

FROM

json.`abfss://containername@storageaccountname.dfs.core.windows.net/TopFolder/SecondFolder/myfile2.json`

) xTo reference the file in the data lake in the FROM clause of the query, we specify the file format first (JSON) followed by a dot and then the file path surround by backticks (not single quotes). If we needed to reference a folder instead we would just end the path at the folder name (no trailing slash is necessary).

The explode() function is great for turning objects in an array into columns in a tabular dataset. To access nested objects, you can use dot notation. If you need to parse more complex JSON, this is a helpful resource.

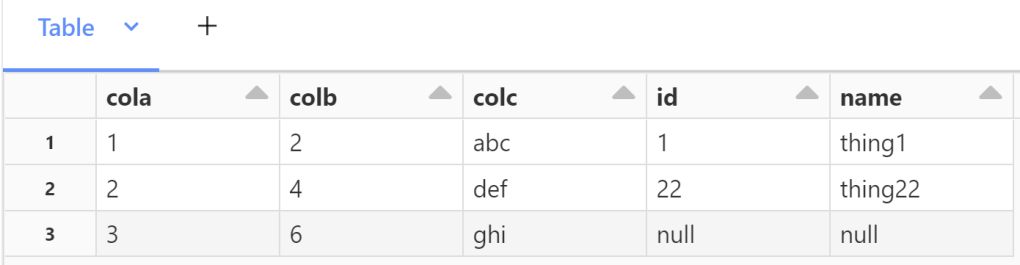

The query from the view above creates the following output.

I’m not sure yet if there are any consequences (performance? security?) of defining a view like this rather than first creating an external table. I couldn’t get the external table created without modifying the JSON files, which I was trying to avoid. I do the view produces the correct results. If you have experimented with this, let me know what you learned.