I recently needed to ensure that a Power BI imported dataset would be refreshed after populating data in my data mart. I was already using Azure Data Factory to populate the data mart, so the most efficient thing to do was to call a pipeline at the end of my data load process to refresh the Power BI dataset.

Power BI offers REST APIs to programmatically refresh your data. For Data Factory to use them, you need to register an app (service principal) in AAD and give it the appropriate permissions in Power BI and to an Azure key vault.

I’m not the first to tackle this subject. Dave Ruijter has a great blog post with code and a step-by-step explanation of how to use Data Factory to refresh a Power BI dataset. I started with his code and added onto it. Before I jump into explaining my additions, let’s walk through the initial activities in the pipeline.

Before you can use this pipeline, you must have:

- an app registration in Azure AD with a secret

- a key vault that contains the Tenant ID, Client ID of your app registration, and the secret from your app registration as separate secrets.

- granted the data factory managed identity access to the keys in the key vault

- allowed service principals to use the Power BI REST APIs in in the Power BI tenant settings

- granted the service principal admin access to the workspace containing your dataset

For more information on these setup steps, read Dave’s post.

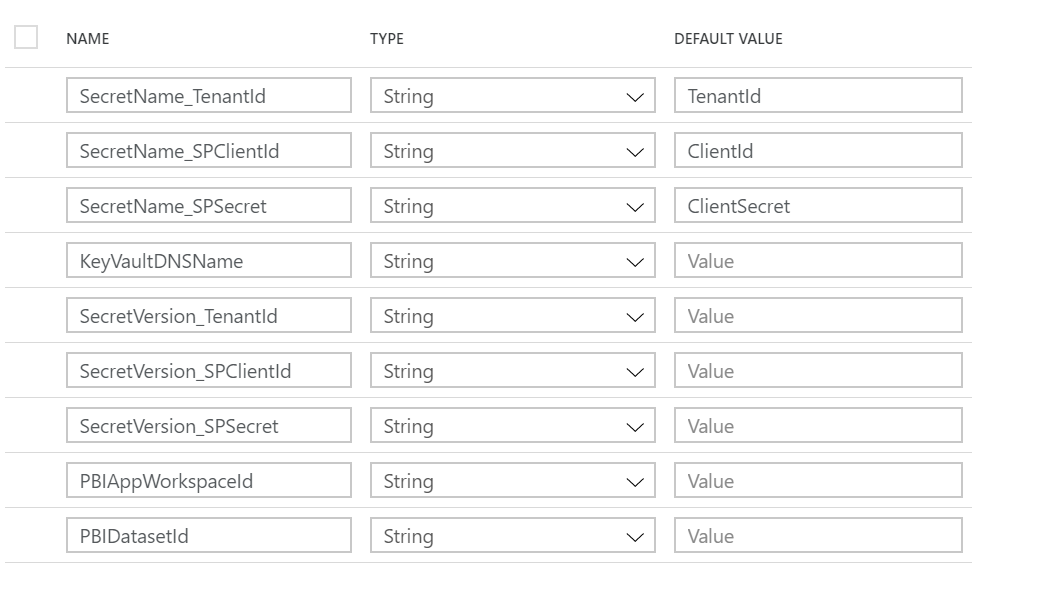

The pipeline contains several parameters that need to be populated for execution.

The first seven parameters are related to the key vault. The last two are related to Power BI. You need to provide the name and version of each of the three secrets in the key vault. The KeyVaultDNSName should be https://mykeyvaultname.vault.azure.net/ (replace mykeyvaultname with the actual name of your key vault). You can get your Power BI workspace ID and dataset ID from the url when you navigate to your dataset settings.

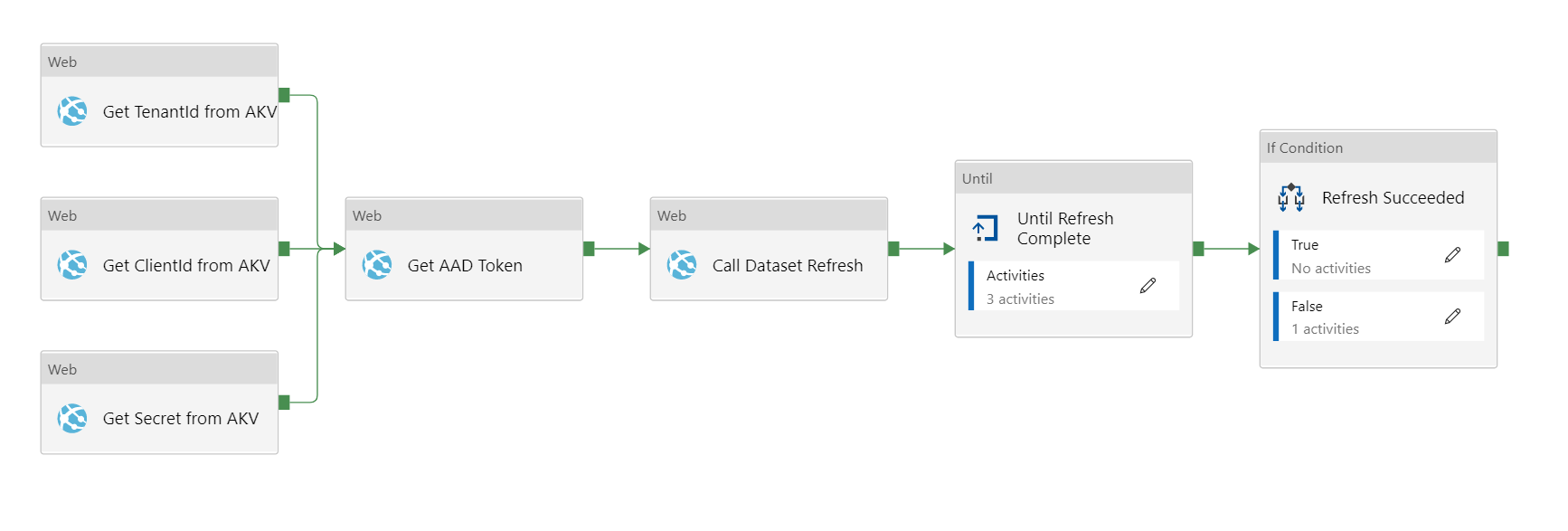

The “Get TenantId from AKV” activity retrieves the tenant ID from the key vault. The “Get ClientId from AKV” retrieves the Client ID from the key vault. The “Get Secret from AKV” activity retrieves the app registration secret from the key vault. Once all three of these activities have completed, Data Factory executes the “Get AAD Token” activity, which retrieves an auth token so we can make a call to the Power BI API.

One thing to note is that this pipeline relies on a specified version of each key vault secret. If you always want to use the current version, you can delete the SecretVersion_TenantID, SecretVersion_SPClientID, and SecretVersion_SPSecret parameters. Then change the expression used in the URL property in each of the three web activities .

For example, the URL to get the tenant ID is currently:

@concat(pipeline().parameters.KeyVaultDNSName,'secrets/',pipeline().parameters.SecretName_TenantId,'/',pipeline().parameters.SecretVersion_TenantId,'?api-version=7.0')

To always refer to the current version, remove the slash and the reference to the SecretVersion_TenantID parameter so it looks like this:

@concat(pipeline().parameters.KeyVaultDNSName,'secrets/',pipeline().parameters.SecretName_TenantId,'?api-version=7.0')

The “Call Dataset Refresh” activity is where we make the call to the Power BI API. It is doing a POST to https://api.powerbi.com/v1.0/myorg/groups/{groupId}/datasets/{datasetId}/refreshes and passes the previously obtained auth token in the header.

This is where the original pipeline ends and my additions begin.

Getting the Refresh Status

When you call the Power BI API to execute the data refresh, it is an asynchronous call. This means that the ADF activity will show success if the call is made successfully rather than waiting for the refresh to complete successfully.

We have to add a polling pattern to periodically check on the status of the refresh until it is complete.

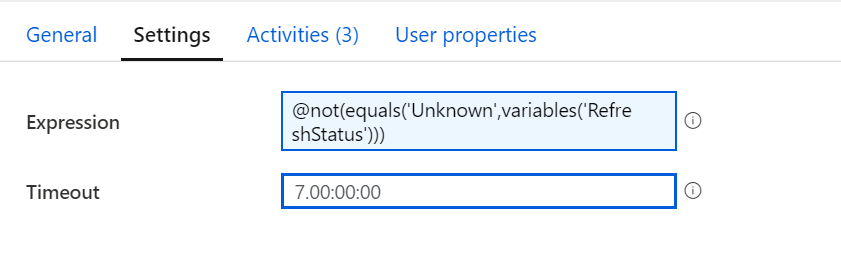

We start with an until activity. In the settings of the until loop, we set the expression so that the loop executes until the RefreshStatus variable is not equal to “Unknown”. (I added the RefreshStatus variable in my version of the pipeline with a default value of “Unknown”.) When a dataset is refreshing, “Unknown” is the status returned until it completes or fails.

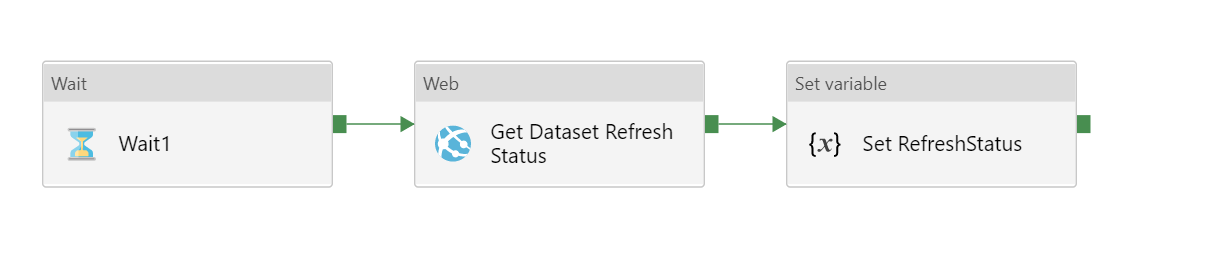

Inside of the “Until Refresh Complete” activity are three inner activities.

The “Wait1” activity gives the dataset refresh a chance to execute before we check the status. I have it configured to 30 seconds, but you can change that to suit your needs. Next we get the status of the refresh.

This web activity does a GET to the same url we used to start the dataset refresh, but it adds a parameter on the end.

https://docs.microsoft.com/en-us/resGET https://api.powerbi.com/v1.0/myorg/groups/{groupId}/datasets/{datasetId}/refreshes?$top={$top}

The API doesn’t accept a request ID for the newly initiated refresh, so we get the last initiated refresh by setting top equal to 1 and assume that is the refresh for which we want the status.

The API provides a JSON response containing an array called value with a property called status.

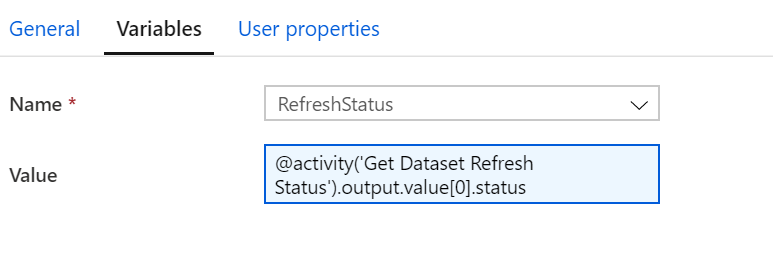

In the “Set RefreshStatus” activity, we retrieve the status value from the previous activity and set the value of the RefreshStatus variable to that value.

We want the status value in the first object in the value array.

The until activity then checks the value of the RefreshStatus variable. If your dataset refresh is complete, it will have a status of “Completed”. If it failed, the status returned will be “Failed”.

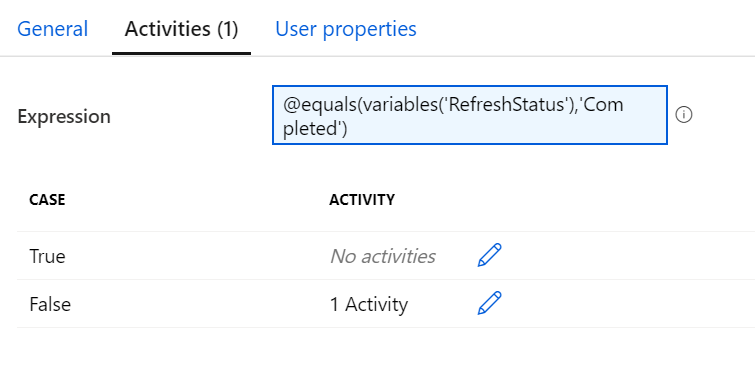

The If activity checks the refresh status.

If the refresh status is “Completed”, the pipeline execution is finished. If the pipeline activity isn’t “Completed”, then we can assume the refresh has failed. If the dataset refresh fails, we want the pipeline to fail.

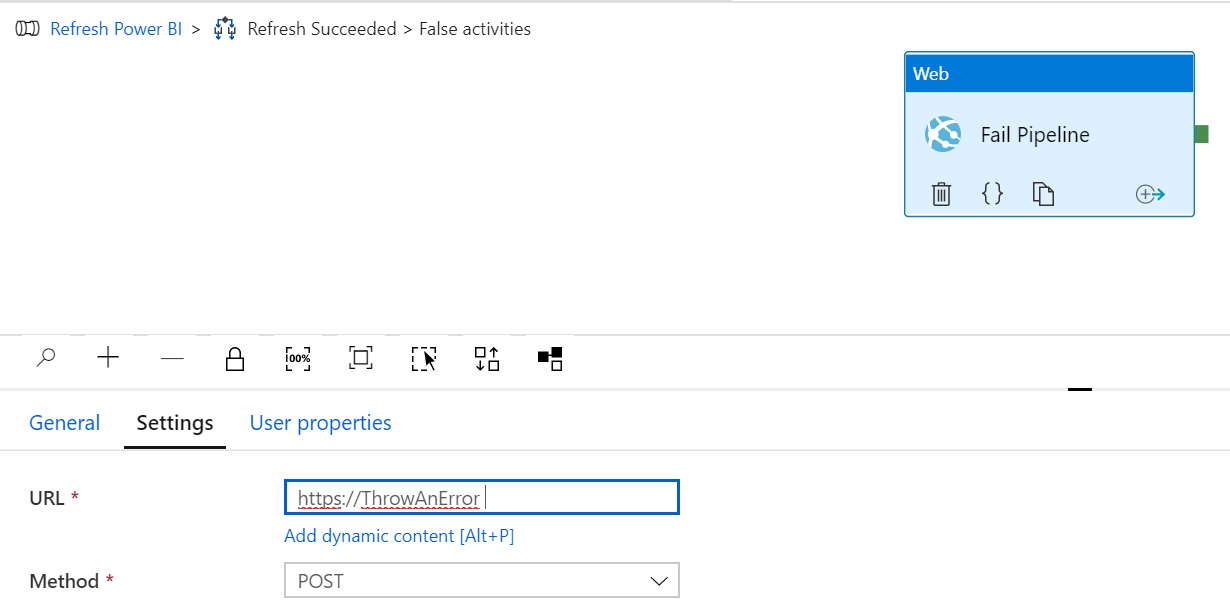

There isn’t a built-in way to cause the pipeline to fail so we use a web activity to throw a bad request.

We do a POST to an invalid URL. This causes the activity to fail, which then causes the pipeline to fail.

Since this pipeline has no dependencies on datasets or linked services, you can just grab my code from GitHub and use it in your data factory.